Warning: Undefined array key "HTTP_REFERER" in

/www/wwwroot/prod/www.enjoyasp.net/wp-content/plugins/google-highlight/google-hilite.php on line

58

正如”随机数的生成过程非常重要因此我们不能对其视而不见”(引自Robert R -橡树岭国家实验室),我们希望占用您一点点宝贵的时间在基于SQL Server MVP Jeff Moden的 成果的基础上完成这项工作。对于使用SQL来产生随机数来说,我们会重点讲解从均匀分布随机数(non-uniformly distributed random numbers)的基础上生成非均匀分布随机数(uniformly distributed random numbers);包括一些统计分布的基础来帮你起步。

正如我们所知,随机数在仿真中非常重要(尤其是蒙特卡洛仿真法),还有随机数在密码学以及其它高科技领域中也扮演了同样重要的角色。除此之外在我们的SQL Server中有时也需要产生大量的随机数据来测试SQL的性能。

因为我并不是统计学家,因此我们这里仅仅来看用SQL生成并且能显而易见的看出其随机性的的随机数字,而并不会深入到数学原理来看这个随机性是真正的“随机”还是“貌似随机”我们的意图是文章中算法的正确性以及这个算法在非关键领域是否足够使用。

通常来说,由均匀随机数转换成非均匀随机数的技术是将均匀随机数乘以累计分布函数(CDF)对于目标数据的反转。但在实践中,累计分布函数是否针对特定分布存在有效哪怕是接近的函数并不好估计。但幸运的是,比我们聪明许多的那帮家伙已经分析过了多个领域的多种分布函数我们可以直接拿来使用,这些函数可以满足我们的大多数需求。

测试工具

在我们的测试中,我们采用标准的SQL技术来使用伪URN(均匀分布随机数)函数生成Float类型的参数传给转换函数.,我们将使用标量(Scalar)函数包括SCHEMABINDING关键字解决性能问题然而,或许你还想使用同等的表值函数来测试性能是否还可以进一步提升。首先,来生成测试数据。

-- Data definition and setup

DECLARE @NumberOfRNs INT

,@Lambda FLOAT -- For the Poisson NURNs

,@GaussianMean FLOAT -- For the Normal NURNs

,@GaussianSTDEV FLOAT

,@LambdaEXP FLOAT -- For the Exponential NURNs

,@WeibullAlpha FLOAT -- For the Weibull NURNs

,@WeibullBeta FLOAT

,@Laplaceu FLOAT -- For the Laplace NURNs

,@Laplaceb FLOAT

SELECT @NumberOfRNs = 10000

,@Lambda = 4.0 -- Lambda for the Poisson Distribution

,@GaussianMean = 5 -- Mean for the Normal Distribution

,@GaussianSTDEV = 1.5 -- Standard Deviation for the Normal Distribution

,@LambdaEXP = 1.5 -- Lambda for the Exponential Distribution

,@WeibullAlpha = 1.0 -- Alpha (scale) for the Weibull Distribution

,@WeibullBeta = 1.5 -- Beta (shape) for the Weibull Distribution

,@Laplaceu = 4.0 -- Mu (location) for the Laplace Distribution

,@Laplaceb = 1.0 -- Beta (scale) for the Laplace Distribution

--CREATE TYPE Distribution AS TABLE (EventID INT, EventProb FLOAT, CumProb FLOAT)

DECLARE @Binomial AS Distribution

,@DUniform AS Distribution

,@Multinomial AS Distribution

-- Simulate a coin toss with a Binomial Distribution

INSERT INTO @Binomial

SELECT 0, 0.5, 0.5 UNION ALL SELECT 1, 0.5, 1.0

-- Events returned by this Discrete Uniform distribution are the 6

-- Fibonacci numbers starting with the second occurrence of 1

INSERT INTO @DUniform

SELECT 1, 1./6., 1./6. UNION ALL SELECT 2, 1./6., 2./6.

UNION ALL SELECT 3, 1./6., 3./6. UNION ALL SELECT 5, 1./6., 4./6.

UNION ALL SELECT 8, 1./6., 5./6. UNION ALL SELECT 13, 1./6., 1.

-- Events returned by this Multinomial distribution are the 5

-- Mersenne primes discovered in 1952 by Raphael M. Robinson

INSERT INTO @Multinomial

SELECT 521, .10, .10 UNION ALL SELECT 607, .25, .35 UNION ALL SELECT 1279, .30, .65

UNION ALL SELECT 2203, .15, .80 UNION ALL SELECT 2281, .2, 1.

(译者注:我将@NumberOfRNs 参数由100W改成1W,因为100W在测试中占用的时间过长,如果你喜欢,可以随意改动。)

下面是测试工具,为了我们期望的目标分布生成NURNs(非均匀分布随机数):

-- Create random numbers for the selected distributions

SELECT TOP (@NumberOfRNs)

RandomUniform = URN

--,RandomPoisson = dbo.RN_POISSON(@Lambda, URN)

,RandomBinomial = dbo.RN_MULTINOMIAL(@Binomial, URN)

,RandomDUniform = dbo.RN_MULTINOMIAL(@DUniform, URN)

,RandomMultinomial = dbo.RN_MULTINOMIAL(@Multinomial, URN)

,RandomNormal = dbo.RN_NORMAL(@GaussianMean, @GaussianSTDEV, URN, RAND(CHECKSUM(NEWID())))

,RandomExponential = dbo.RN_EXPONENTIAL(@LambdaEXP, URN)

,RandomWeibull = dbo.RN_WEIBULL(@WeibullAlpha, @WeibullBeta, URN)

,RandomLaplace = dbo.RN_LAPLACE(@Laplaceu, @Laplaceb, URN)

INTO #MyRandomNumbers

FROM sys.all_columns a1 CROSS APPLY sys.all_columns a2

CROSS APPLY (SELECT RAND(CHECKSUM(NEWID()))) URN(URN)

接下来,我们将会逐个介绍每种分布类型,但是在此之前,首先阐述一下关于我们的测试工具你可能会问到的问题:

-

生成高斯分布NURN的算法需要两个URN

-

我们使用RN_MULTINOMIAL 函数来生成三种不同的分布类型,因为其比另外两种更加通用(会在文章后面详细解释)

-

在创建函数RN_MULTINOMIAL之前,首先需要创建自定义表类型。自定义表类型是在SQL Server 2008引入的,因此在SQL Server 2005中你不能使用这种方式,另一种替代方式是使用XML

-

我已经在文章附件中附加上了脚本,你首先运行Function.sql,然后运行NURNs.sql。生成100W的数据大概需要4.5分钟(译者注:在我的笔记本上跑了2.5分钟)

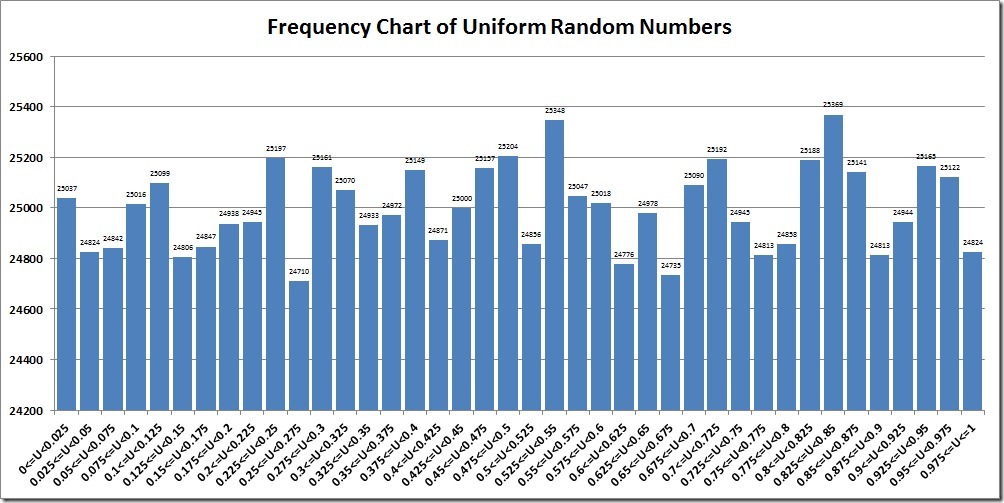

均匀分布随机数(Uniform Random Numbers) —–怎么个均匀法?

因为接下来我们所有的数据分布都是基于均匀随机数之上,所以我们来看一下这些由标准SQL生成的随机数是怎么个均匀法。如果这些所谓的“均匀数”如果并不是那么”均匀”的话,那或多或少会对我们后面的结果产生影响。

在{0,1}之间的URN概率很简单,就是0.5。对于这一区间的方差则为1/12。对于使用SQL SERVER内置的AVG()和VAR()函数来汇总我们生成的100W条数据。结果和我们期望的差不多。

PopulationMean |

PopulationVAR |

SampleMean |

SampleVAR |

4 |

0.083333 |

0.499650832838227 |

0.0832923192890653 |

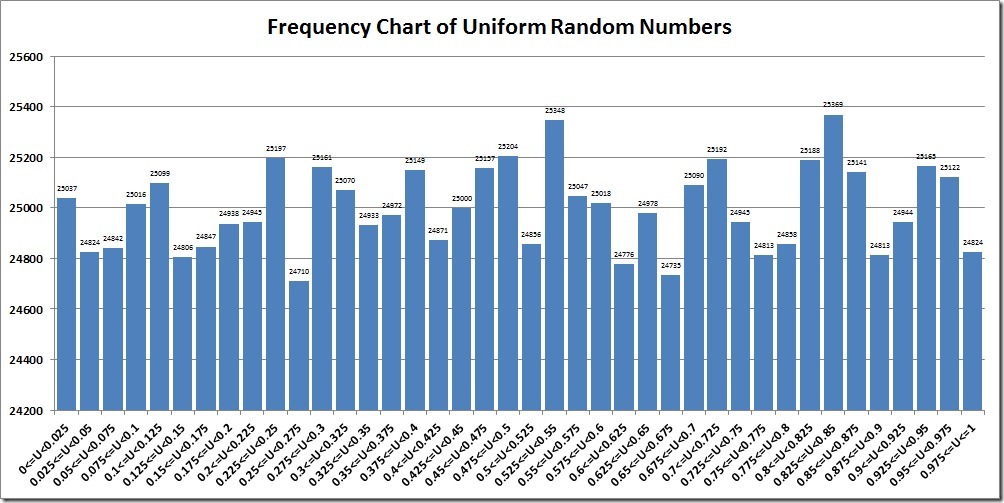

下面的柱状图可以清晰的看到对于我们预定义区间的数据的分布:

正如我们所看到的结果,虽然结果并不是非常的“标准”但是已经足够对于我们这种并不需要那么精确的测试了。这里需要注意的是,我经过多次测试选择的上述结果,并没有特别的选取某个结果,如果你自己跑一遍SQL,你会发现你得到的结果也差不多。

多项式随机数

在我们开始讨论多项分布之前,我们首先看一下其它两种类型的离散分布。

人们所熟知的抛硬币概率其实就是柏松分布。这种用来表示二选一(正面或者反面)发生的概率,返回0或者1(或是其它某个数)来表示事件是否发生(也可以理 解成“成功”或”失败”)。当然了,就抛硬币而言出现正面和反面概率是对等的,都是50%。但是柏松分布也允许其它非50%的概率,毕竟人生不总是那么公 平嘛。

离散均匀分布则描述了多于2个事件,比如说仍骰子,每一面出现的概率都是相同的。当然,这也是和我们生成给定范围内随机整数的方法是类似的。Jeff Moden曾经在这里给出过描述。

多项式随机数比上述两种分布类型还要宽泛,它模拟了在一系列事件中每一个单独事件发生的概率并不相同的情况。

记住,我们的事件并不必要是一系列简单数字的集合,比如0,1,2,3。还可以是任何数字(包括负数)的集合.比如,在我们的多项式分布中我们选择了由Raphael M. Robinson在1952年发现的梅森尼质数,对于我们的离散均匀分布来说,我们采用了以1为开始的斐波纳契数列的前6个数。我们还可以通过修改用户自定义表类型Distribution的EventID列由INT改为FLOAT来将事件改为FLOAT类型。

现在我们来看上面我们所设立用于测试的三个表变量,我们可以发现:

-

@Binomial定义了两个事件(0,1),事件发生的概率P为50%,事件不发生的概率为1-p

-

@DUniform定义了6个事件(1, 2, 3, 5, 8, 13),就像扔骰子一样,每一个事件的概率都是1/6

-

@Multinomial定义了5个事件(521, 607, 1279, 2203, 2281),每一个事件发生的概率各不同

对于我们测试的每一种分布,我们都进行了大量的测试结果后概率百分比如下表:

RandomBinomial BinomialFreq EventProb ActPercentOfEvents

0 499778 0.5 0.499778000000

1 500222 0.5 0.500222000000

RandomDUniform DUniformFreq EventProb ActPercentOfEvents

1 166288 0.166666 0.166288000000

2 166620 0.166666 0.166620000000

3 166870 0.166666 0.166870000000

5 166539 0.166666 0.166539000000

8 166693 0.166666 0.166693000000

13 166990 0.166666 0.166990000000

RandomMultinomial MultinomialFreq EventProb ActPercentOfEvents

521 99719 0.1 0.099719000000

607 249706 0.25 0.249706000000

1279 300376 0.3 0.300376000000

2203 149633 0.15 0.149633000000

2281 200566 0.2 0.200566000000

通过上面的表格不难发现,这个概率和我们所期望的概率基本吻合。

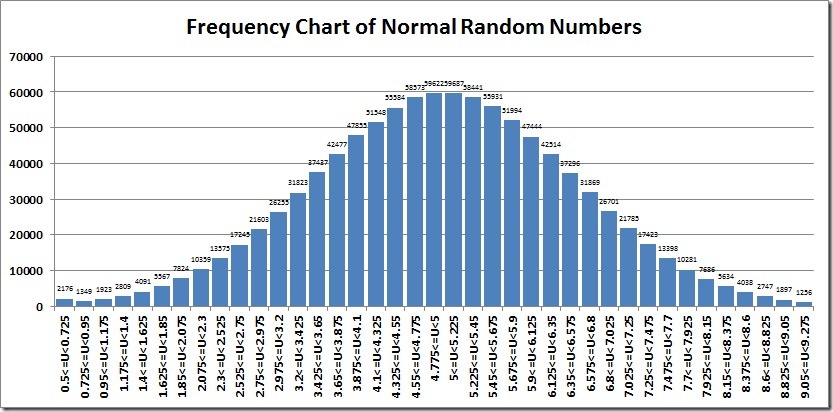

高斯(或正态)分布随机数

在最近的讨论中,SSC论坛的会员GPO发帖询问基于高斯(正态)分布生成随机数的问题。所以我开始研究这个问题(这其实也是本篇文章的灵感来源)并使用了博克斯·马勒变换方法,基于我的RN_GAUSSIAN函数,你可以忽视我在这个帖子中对于如何利用URN生成NURN的算法.

高斯分布是一个连续分布,对于熟悉这种分布需要我们一点点解释,它经常采用平均数附近的“标准”样本进行分析。

出了平均数之外,还必须指定标准偏差。下面的函数帮助我们认识能够帮我们了解示例总体数据正态分布的形状。

首先我们先来比较总体(期望)平均数和方差与示例平均数和方差的比较来看这两者之间是否接近。

PopulationMean PopulationSTDEV SampleMean SampleSTDEV

5 1.5 5.00049965700704 1.50020497041145

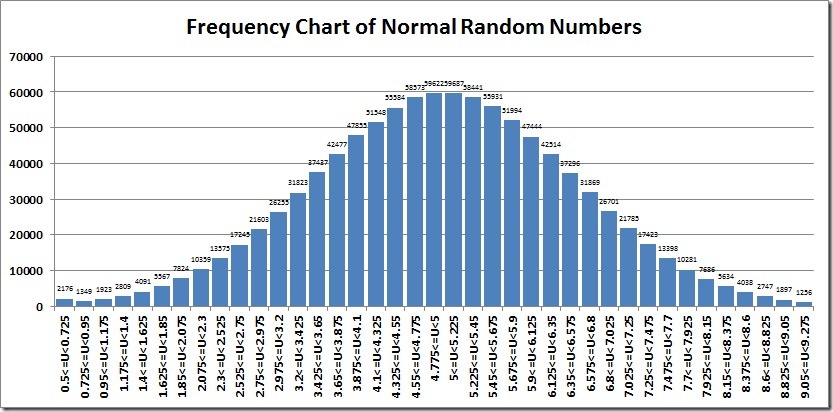

然后,我们再来看图表。图中的间隔是加上或者减去3个平均数的标准差.

读者如果熟悉”正态”分布就会体会到这张图是多么的”标准(正态)”,我们还认识到1,000,000中有998,596(99.86%)在我们的3个平均数的标准差之内。这也是我们所期望的结果。

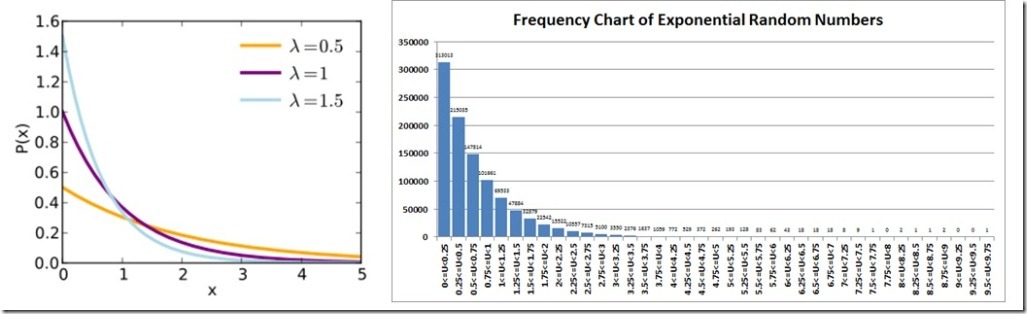

指数随机数

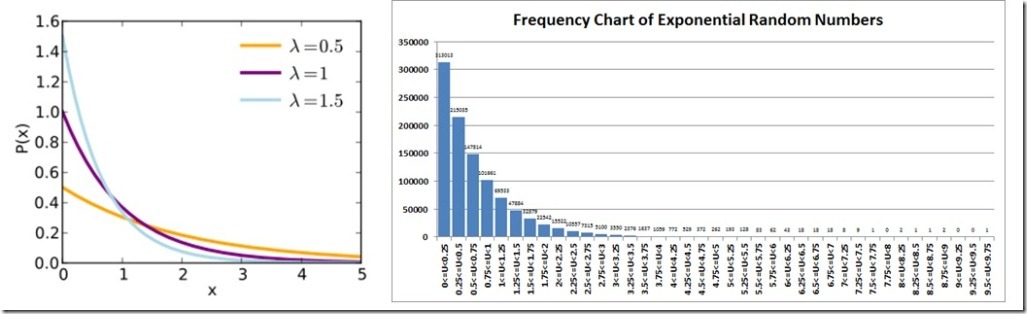

指数随机分布是可以用CDF(累计分布函数)进行分布,并且可以很容易的用接近的表达式表达出来的分布。指数随机被应用于物理学,水理学,可靠性,等待时间等候论领域。

首先要知道总体平均数是1/Lambda,标准方差是1/Lambda的平方,我们可以看到我们的总体平均数和方差和示例数据十分接近。

PopulationMean PopulationVAR SampleMean SampleVAR

0.6667 0.4444 0.666 0.4444

我们可以看由维基百科上提供的概率密度曲线,当Lambda取值为1.5时(蓝色的线)和我们的数据比较非常相似。

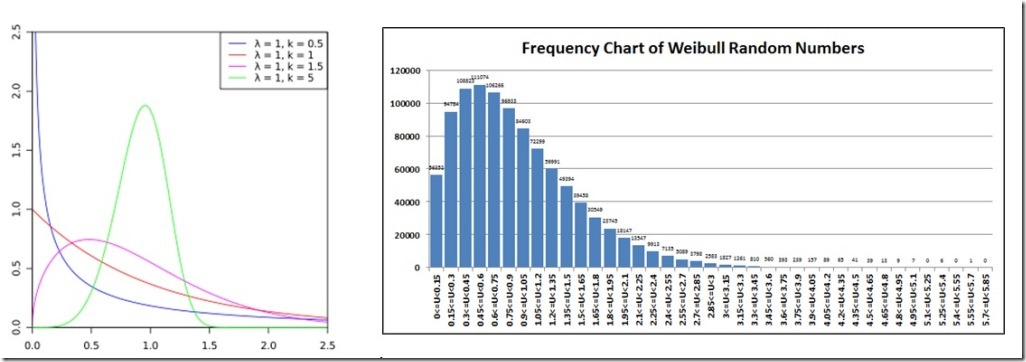

韦伯分布随机数

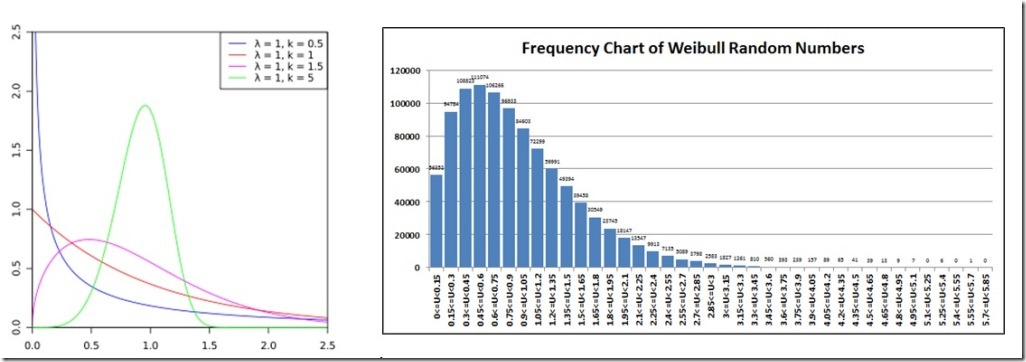

在大学中对韦伯分布已经小有研究,按照我的理解韦伯分布也是十分规律的,所以非常适合我们在这里生成非均匀随机数(NURN).韦伯分布在很多统计和工程领域都有应用,包括天气预报,保险,水理学,存活分析,可靠性工程(我教这门课的大学教授一定会为我骄傲的)以及其它领域。

生成韦伯分布的公式我们可以在维基百科找到。而在我们这里实现这个方式的RN_WEIBULL函数实现起来也非常简单。两个参数分别为形状和尺度参数(@WeibullAlpha,@WeibullBeta)

韦伯分布的并不是那么容易计算,因为表达式使用了伽马分布。下面是我们使用了形状参数为1.0尺度参数为1.5的图形,并与维基百科提供的图形进行了对比。

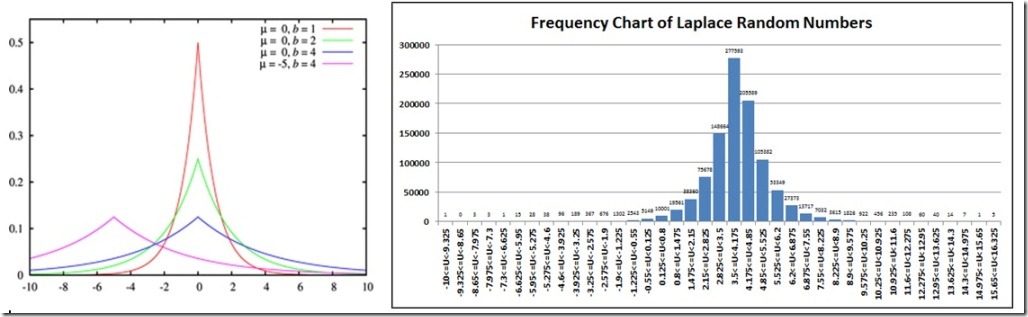

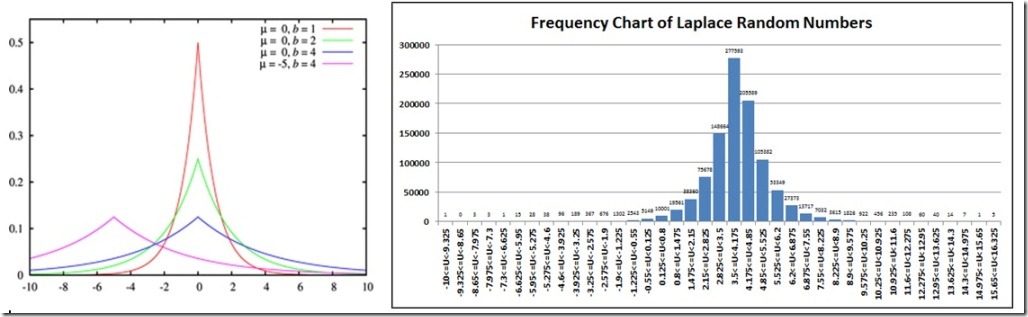

拉普拉斯随机数

或许是因为我是一个geek,还是由于我们大学教这门课的老师非常牛逼。我非常喜欢拉普拉斯变换这门课。当我知道拉普拉斯还发明了拉普拉斯统分布时,我将这种分布加入到本文中来表达对拉普拉斯的敬意。

拉普拉斯分布是一种连续分布,所幸的是,它的累计分布函数(CDF)非常简单,在我们的函数中一个是位置参数,一个是尺度参数。

拉普拉斯分布有着很容易计算的平均数(@u)和标准方差(2*@b^2)所以我们再一次将我们的总体样本数据和示例数据进行比较。

PopulationMean PopulationVAR SampleMean SampleVAR

4 2 4.0009 1.9975

我们再一次使用我们的数据和维基百科提供的数据分布图进行比较。在这里我们取@b=4(图中红线)

总结

从本文的研究中我们得出结论,生成的非均匀随机数基本是正确的,起码和维基百科提供的数据比来说是正确的。此外我们还发现总体平均数和方差的对应关系。我们所有示例数据都在附件的EXCEL中。

在自然界,人类和其它领域,随机是无所不在的。而工具模拟了这些随机性帮助我们在这个混乱的世界中找到规律。

“创新其实就是在充满不确定的自然界中引入规律”—- Eric Hoffer

科学和工程的研究往往要使用随机数来模拟自然界的现象。我希望我们的研究可以对这些领域的人有所帮助。

对于那些好奇为什么我们不用柏松分布来产生非均匀随机数的人。因为在SQL的内置函数中不允许我们生成多个随机数(不使用NEWID() 和 RAND()是因为它们有“副作用”),我们将继续寻找在SQL Server中生成随机数更好的办法。

本文阐述了在统计学中普遍存在的Alpha, Beta, Gamma和F分布。但是在生成非均匀随机数背后的数学原理更加复杂,所以有兴趣的高端读者可以自行查找资料。

我希望感谢那些勇猛无畏把本文读完的读者,尤其是那些一路跟着思考的读者。谢谢它们对于非均匀随机数的兴趣和我们对于这个领域能提供更有用的例子。

参考:http://www.cnblogs.com/CareySon/archive/2012/07/11/GenerateNURNsUsingSQLServer.html